Why Look for Alternative Ways to Access DeepSeek?

Because of heavy traffic and network attacks, DeepSeek’s official website and app have recently been returning frequent “server busy” errors. The service is unstable, and the API has been unavailable.

Fortunately, many platforms have integrated DeepSeek-R1. If you’re eager to try DeepSeek, here are 15 alternative platforms where you can use DeepSeek-R1.

I have personally tested all of these platforms and can confirm they are usable.

Let’s dive in.

What are third-party platforms that provide access to DeepSeek?

Third-party platforms such as PopAi, along with AI service aggregators, provide alternative access to DeepSeek. They integrate DeepSeek’s models, including R1 and V3, so users can keep using DeepSeek’s capabilities even when DeepSeek’s own access points are down or rate-limited.

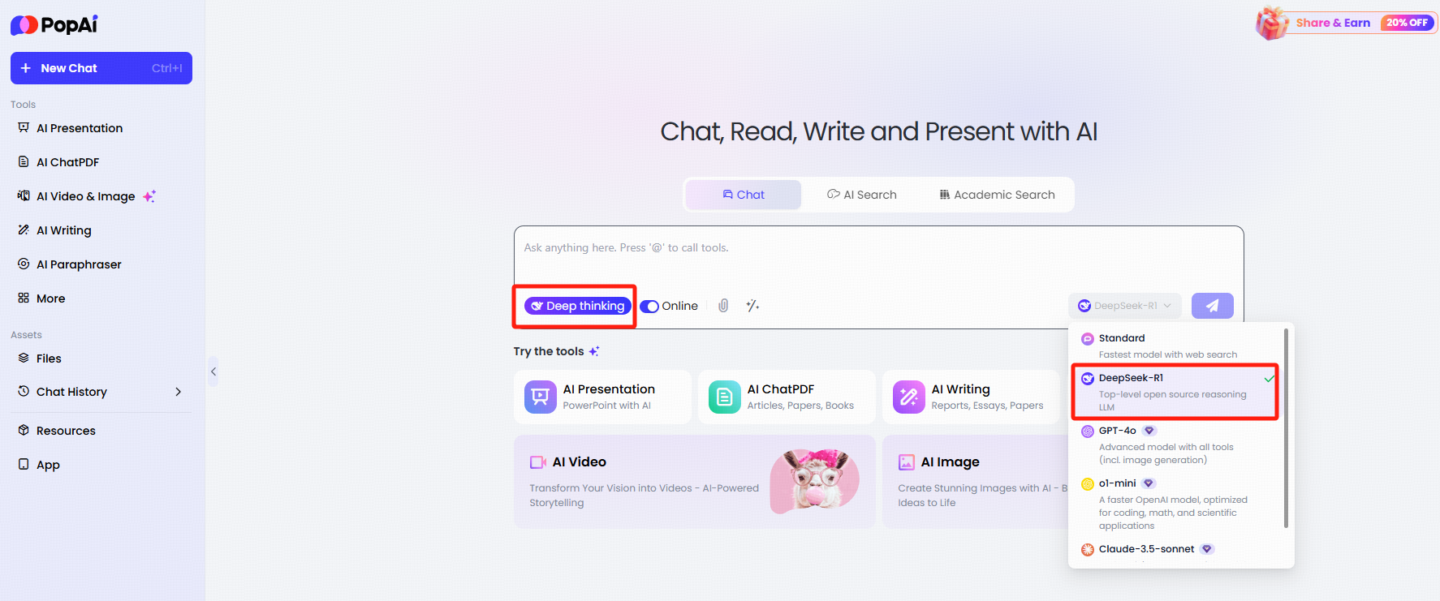

1 PopAi: The Best All-Around Alternative Platform to DeepSeek

PopAi is an AI productivity tool offering instant answers, AI document chat, AI-generated presentations, and AI image and video generation. It now offers the DeepSeek-R1 model.

In my experience, PopAi feels quite smooth, and its internet-connected search is well developed.

Features

1 Plenty of free uses.

2 A smooth, seamless experience without “server busy” errors.

3 Internet access, so answers can draw on the latest and most comprehensive information.

Steps

Visit the website and select “DeepSeek-R1” to get started.

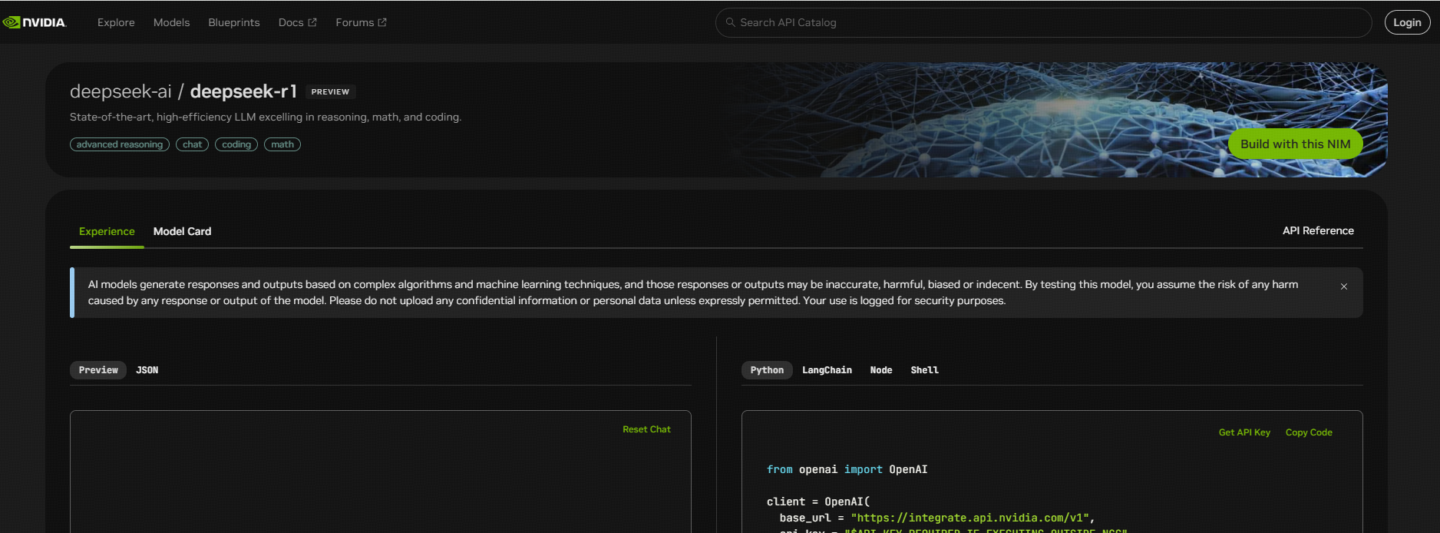

2 NVIDIA NIM Microservices: Fast DeepSeek Integration

NVIDIA has deployed the full 671B-parameter DeepSeek-R1 model, which you can use directly in the browser: just open the model page to reach the chat window. However, because the number of free users keeps growing, NIM may slow down at certain times.

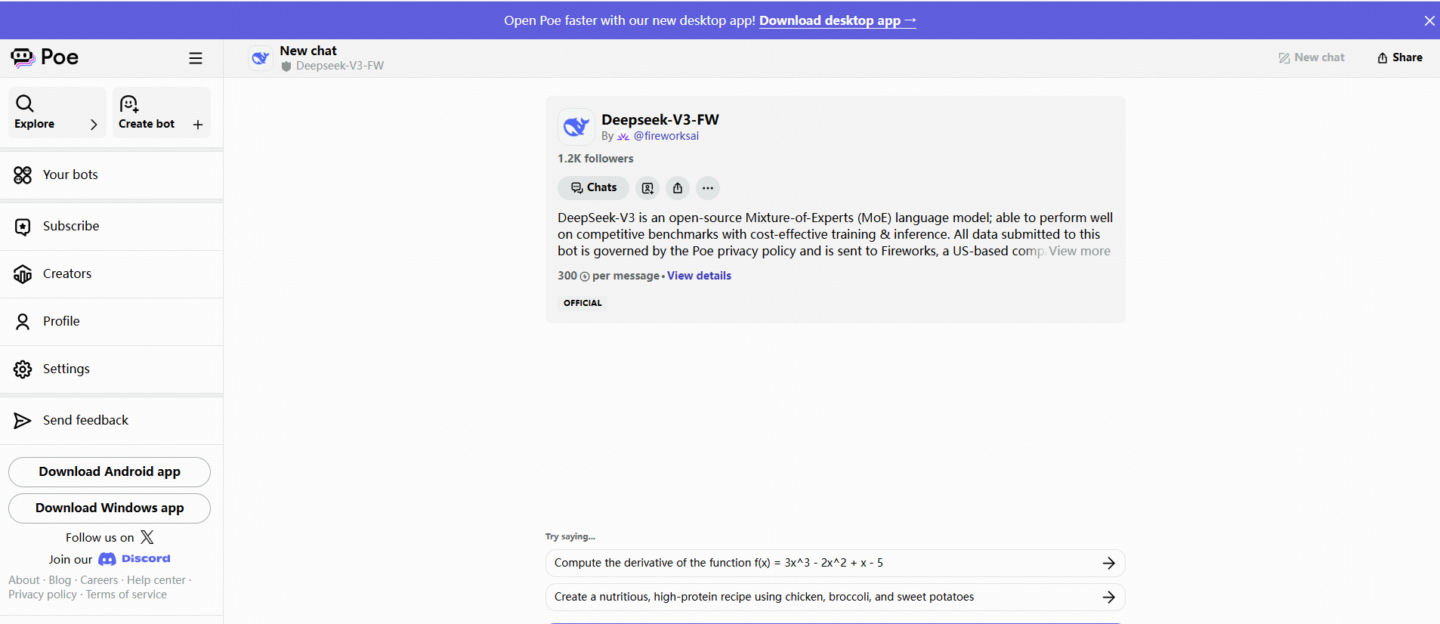

3 Poe: Popular AI Aggregation Platform Integrating DeepSeek-R1

Poe is one of the most popular AI aggregation platforms, and it recently added DeepSeek-R1. Poe’s version performs even better than the official one and offers a longer context length than the official website.

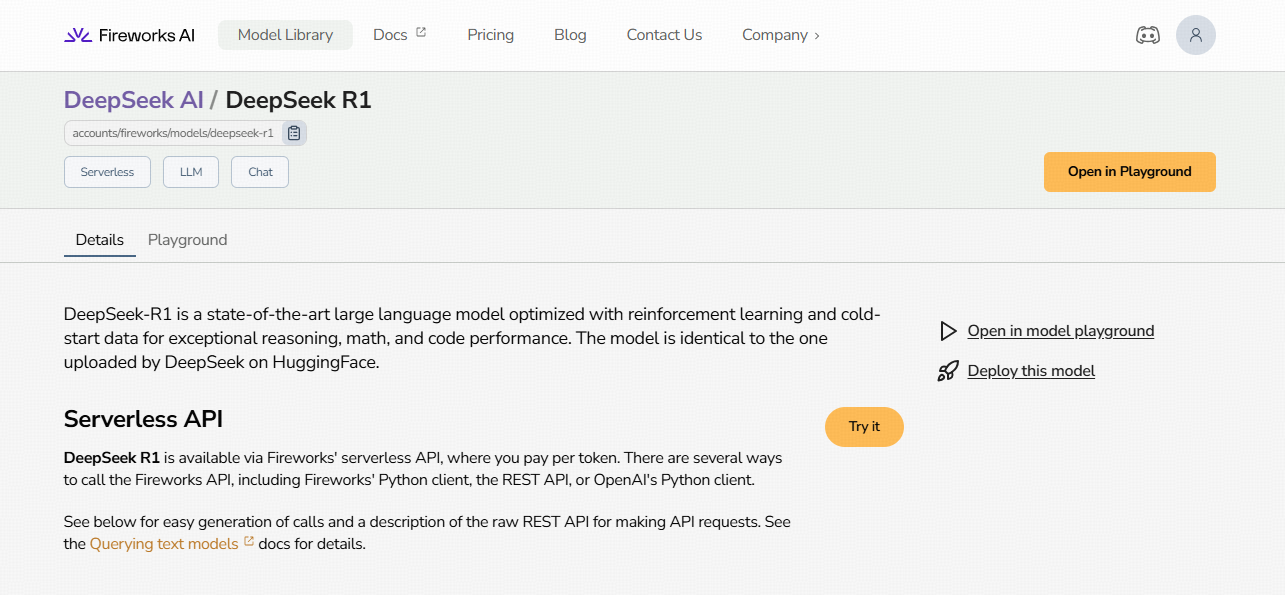

4 Fireworks AI: Professional AI Model Training Platform

Fireworks AI is a professional AI model training platform. You can currently find DeepSeek R1 in the Model Square and click “Try” to use it directly, though you’ll need to register an account first.

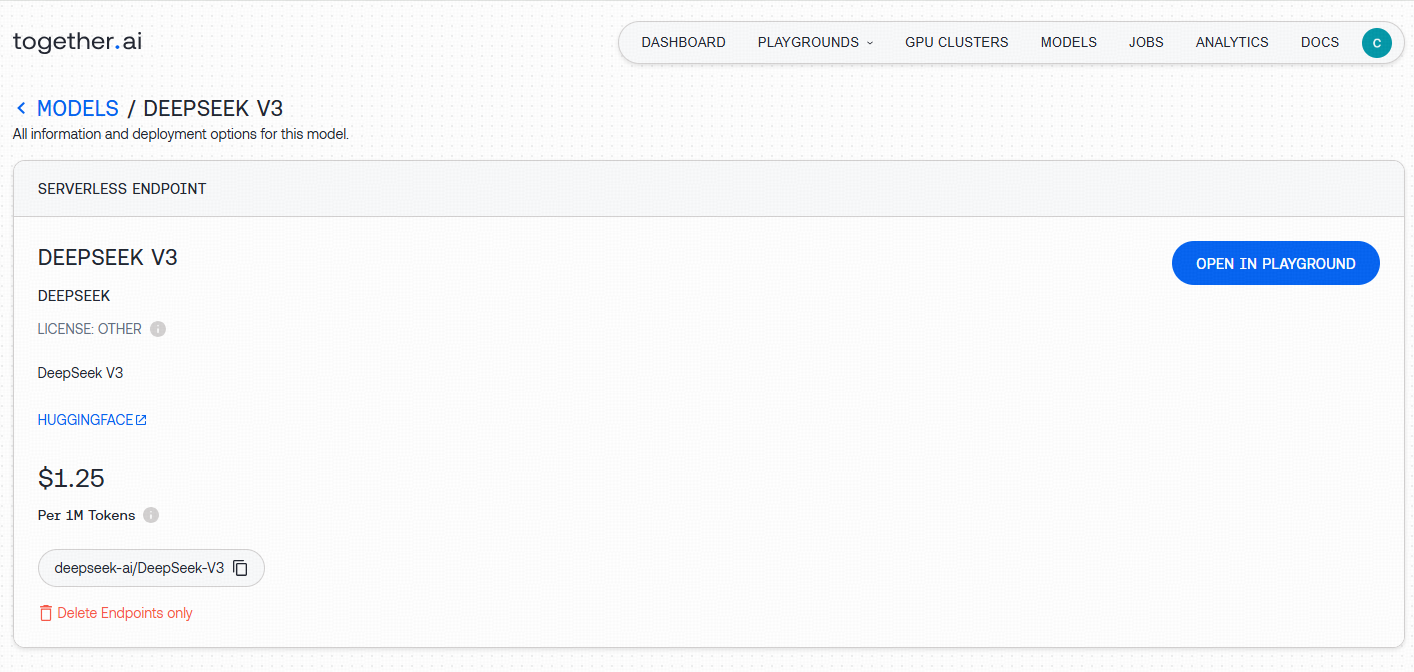

5 Together: Cloud-Based Open Source AI Platform

Together is a cloud-based platform for building open-source generative AI and developing AI models. The DeepSeek R1 available on Poe is one of the models Together provides.

As with Fireworks, after logging in you’ll need to fill out some basic account information (anything will do), then select DeepSeek R1, and you’re good to go!

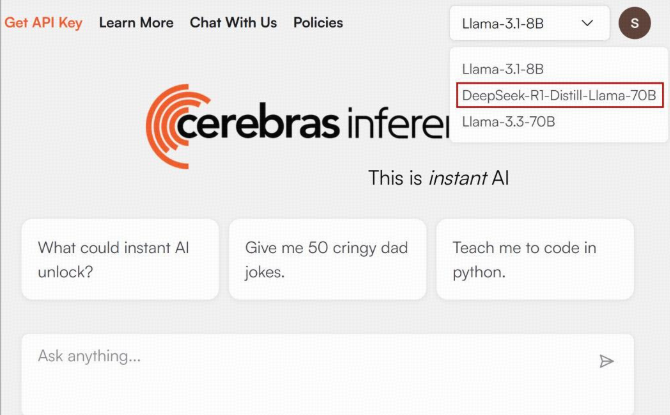

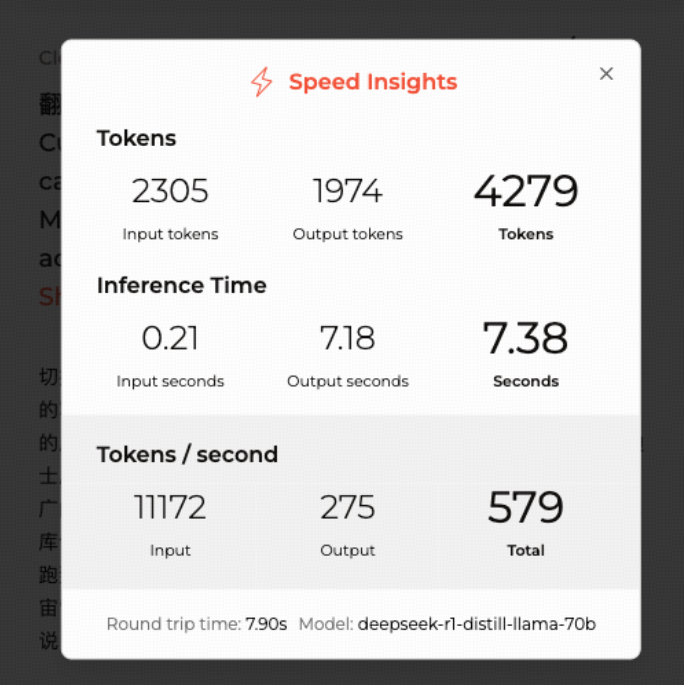

6 Cerebras: Ultra-Fast DeepSeek Model with 70B Parameters

Unlike the larger platforms above, Cerebras serves a 70B distilled version of DeepSeek-R1 rather than the full model, and claims to be “57 times faster than GPU solutions.” Once you are registered, using it is simple: log in with your email and select DeepSeek-R1 from the dropdown menu at the top.

The downside is that the registration process is very cumbersome.

7 Groq: Speed-focused DeepSeek Alternative

Groq is a platform that hosts open-source large models for inference. It runs them on its LPU (Language Processing Unit), a chip designed for AI language and inference workloads, with a strong focus on speed.

Groq does not offer the full R1 model; instead it serves a 70B distilled version, which is sufficient for general use and well suited to quick tasks like translation and simple text generation.
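Why would a speed-focused provider serve a distilled model? A back-of-envelope estimate of weight memory makes the trade-off concrete. This sketch assumes memory ≈ parameter count × bytes per parameter and ignores the KV cache, activations, and serving overhead, so real requirements are higher. Note that although R1 is a mixture-of-experts model that activates only about 37B parameters per token, all 671B parameters still have to be held in memory.

```python
# Back-of-envelope memory needed just to hold model weights.
# Assumption: memory = parameter count x bytes per parameter;
# KV cache, activations, and serving overhead are ignored.

BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    full_r1 = 671e9    # full DeepSeek-R1, total parameters
    distilled = 70e9   # 70B distilled variant
    for dtype in ("bf16", "fp8"):
        print(
            f"{dtype}: full R1 ~ {weight_memory_gb(full_r1, dtype):,.0f} GB, "
            f"70B distill ~ {weight_memory_gb(distilled, dtype):,.0f} GB"
        )
```

At 8-bit precision the full model needs roughly 671 GB for its weights alone, about ten times the 70B distilled model (70 GB), which is likely one reason providers that prioritize speed offer the smaller variant.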

However, Groq is not 100% stable and may experience occasional downtime.

Features

Its main benefit is speed: it is extremely fast, which is one of the things Groq is proudest of. It even highlights the response speed of each reply.

Steps

Open the official website https://groq.com and click “Dev Console” at the top right to reach the login page.

8 SiliconFlow: High-Traffic, Token-Based Access

SiliconFlow is a currently popular model service that grants 20 million tokens on registration. Its SiliconCloud platform is also running a promotion: registering with an invitation code grants 20 million tokens to both the inviter and the new user.

After launching the full 671B versions of DeepSeek-R1 and DeepSeek-V3, SiliconFlow recently added six accelerated versions of the distilled DeepSeek-R1 models.

Features

Free tokens on registration

Steps

After registering and logging in, select a DeepSeek model to start using it.
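To get a feel for what a 20-million-token grant covers, divide it by the tokens a typical request consumes. This is a sketch under simplifying assumptions: it treats the grant as a flat token balance billed on prompt plus output tokens, and the 500-token prompt / 1,500-token answer workload is hypothetical. Actual SiliconFlow billing may differ by model, and R1’s reasoning tokens are assumed here to count as output.

```python
def requests_covered(free_tokens: int, prompt_tokens: int, completion_tokens: int) -> int:
    """Number of full requests a token grant covers.

    Assumption: every request bills prompt + completion tokens
    against the same flat balance.
    """
    per_request = prompt_tokens + completion_tokens
    if per_request <= 0:
        raise ValueError("tokens per request must be positive")
    return free_tokens // per_request


if __name__ == "__main__":
    grant = 20_000_000  # tokens granted on registration, per the article
    # Hypothetical workload: 500-token prompt, 1,500-token answer
    # (R1's reasoning tokens assumed to be part of the answer).
    print(requests_covered(grant, 500, 1_500))  # 10000
```

Long R1 answers can spend many tokens on reasoning, so plugging in your own typical request size gives a more realistic count.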

9 Metaso: Chinese Platform with DeepSeek-R1

Metaso currently supports DeepSeek R1 in its web version. It is run by a Chinese company, and unfortunately the interface is available only in Chinese. Its main advantage is that you can use it without logging in. It’s very user-friendly and working great for now, though it’s unclear whether it will start charging in the future.

10 Baidu Smart Cloud Qianfan Platform

Website: Baidu Smart Cloud Qianfan Platform

Features

Offers DeepSeek-R1 and DeepSeek-V3 models, with discounted prices and limited-time free access.

Steps

Log in to the Baidu Smart Cloud Qianfan Platform. In ModelBuilder, select a DeepSeek model to try it.

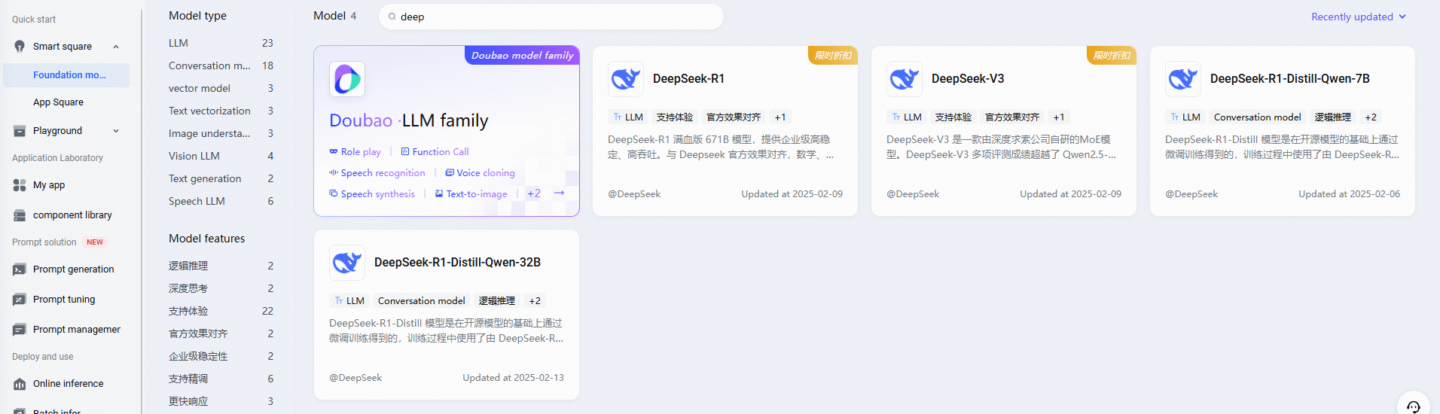

11 Volcengine: ByteDance’s AI Model Platform

Volcengine, ByteDance’s model aggregation platform, also offers the DeepSeek-R1 model. You can find it under Smart Square – Model Square.

12 Aliyun PAI Model Gallery: One-Click Deployment of DeepSeek

Website: PAI Model Gallery

Features

Supports one-click deployment of DeepSeek models.

Steps

Log in to the PAI console and select a region and workspace. In the Model Gallery, find the DeepSeek model and click to deploy it.

13 Unicom Cloud: Comprehensive DeepSeek-R1 Service

Website: Unicom Cloud – Secure Smart Cloud

Features

Supports DeepSeek-R1 series models, providing comprehensive service support.

Steps

Log in to Unicom Cloud and select a DeepSeek model to deploy and use.

14 JD Cloud: Public and Private Deployment Options

Website: JD Cloud Yanxi AI Development Computing Platform

Features

Supports both public cloud and private deployment.

Steps

Log in to JD Cloud and go to the Yanxi AI Development Computing Platform. In the “AI Asset Market,” select a DeepSeek model to deploy.

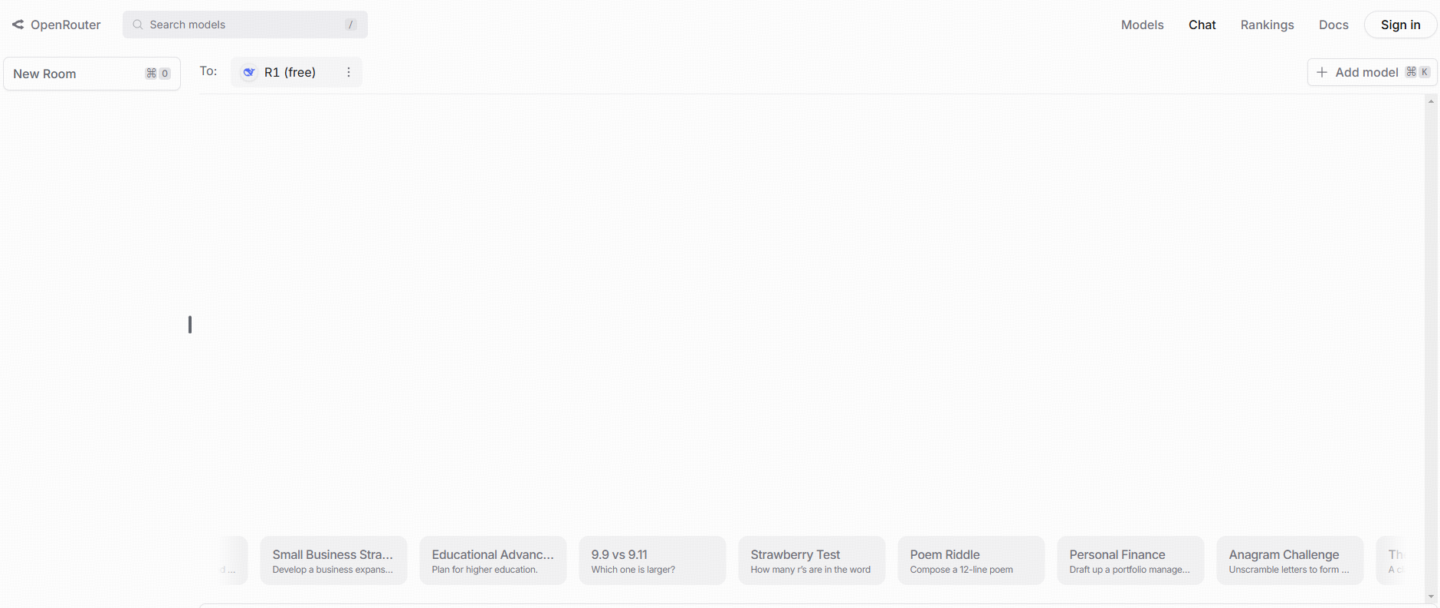

15 OpenRouter: Centralized Platform for Accessing DeepSeek

OpenRouter is a centralized hub that gives you access to many AI models, including DeepSeek’s, through a single account and a single OpenAI-compatible API. This makes it easy to compare models and pick the one that best fits your task.
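Because OpenRouter exposes an OpenAI-style chat completions API, DeepSeek-R1 can also be called from code. The sketch below uses only Python’s standard library. The endpoint and the `deepseek/deepseek-r1` model slug reflect OpenRouter’s public naming at the time of writing and may change; the `OPENROUTER_API_KEY` environment variable name is simply a convention chosen for this example. `build_request` only constructs the request, and nothing is sent unless a key is set.

```python
import json
import os
import urllib.request

# Assumed endpoint and model slug; check OpenRouter's docs for current values.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(prompt: str, api_key: str,
                  model: str = "deepseek/deepseek-r1") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical variable name; only sends a request if it is set.
    key = os.environ.get("OPENROUTER_API_KEY")
    if key:
        req = build_request("Explain binary search in two sentences.", key)
        with urllib.request.urlopen(req, timeout=120) as resp:
            reply = json.load(resp)
        print(reply["choices"][0]["message"]["content"])
```

Trying another model through the same endpoint only requires changing the `model` argument, which is the main appeal of an aggregator like this.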

What is DeepSeek?

DeepSeek is an AI company headquartered in Hangzhou, China, that has drawn worldwide attention in the artificial intelligence field. It is best known for large language models (LLMs) that combine strong reasoning and problem-solving with broad applicability across industries. Like OpenAI and Anthropic, DeepSeek has quickly attracted developers, researchers, and businesses, largely because its models are both competitive and cost-effective.

DeepSeek’s Models: V3 and R1

DeepSeek has released several iterations of its AI models, with the most notable being V3 and R1.

- V3 is a general-purpose model that is designed to handle a wide variety of tasks, making it highly adaptable and efficient for use in different domains such as content generation, data analysis, customer service, and more. With its broad scope, V3 aims to serve as an all-in-one tool for businesses and individuals alike, capable of processing both simple and complex tasks.

- R1, on the other hand, is a reasoning-focused model that excels at tasks requiring multi-step reasoning: it works through an explicit chain of thought before giving its final answer. It was built for complex problem-solving and decision-making, which makes it particularly valuable in fields that depend on nuanced data interpretation and advanced reasoning, such as scientific research, financial analysis, and technical problem-solving.
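On many platforms, R1’s raw output includes its reasoning wrapped in `<think>...</think>` tags, followed by the final answer. If you call R1 through an API and only want the answer, you can split the two. This is a minimal sketch assuming that tag format; some providers return the reasoning in a separate response field instead, and some deployments may emit only the closing tag, which the fallback branch handles.

```python
import re

# Non-greedy match across newlines for the first <think>...</think> block.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> tuple[str, str]:
    """Split raw R1-style output into (reasoning, answer).

    Assumes reasoning is wrapped in <think>...</think>. If only a
    closing tag is present, everything before it is treated as reasoning.
    With no tags at all, the whole text is the answer.
    """
    match = THINK_RE.search(text)
    if match:
        reasoning = match.group(1)
        answer = text[:match.start()] + text[match.end():]
    elif "</think>" in text:
        reasoning, answer = text.split("</think>", 1)
    else:
        reasoning, answer = "", text
    return reasoning.strip(), answer.strip()


if __name__ == "__main__":
    raw = "<think>\n2 + 2 means adding two and two.\n</think>\n\nThe answer is 4."
    reasoning, answer = split_reasoning(raw)
    print(reasoning)  # 2 + 2 means adding two and two.
    print(answer)     # The answer is 4.
```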

Key Features of DeepSeek

DeepSeek’s models have several standout features that set them apart from other AI platforms. These include:

- 671 Billion Parameters: DeepSeek’s V3 and R1 models have 671 billion parameters in total. They use a mixture-of-experts (MoE) architecture that activates only about 37 billion of those parameters for each token, giving them the capacity of a very large model at a much lower inference cost. This scale helps them understand and generate content with strong contextual awareness and precision.

- 128,000-Token Context Length: One of the most powerful features of DeepSeek’s models is their 128,000-token context window, which supports long conversations and analysis of long documents. Because they can take in far more text at once than models with shorter windows, they are well suited to research, data analysis, and summarizing long-form content.

- Strong Performance in Mathematical Reasoning and Problem-Solving: DeepSeek’s AI has demonstrated exceptional abilities in mathematical reasoning and problem-solving, often outperforming other models in this area. This strength is particularly useful for applications that require complex calculations, logical analysis, or optimization, such as financial forecasting, algorithm development, or engineering tasks.

- Versatile Applications Across Industries: DeepSeek’s models are highly versatile, offering applications across a wide range of sectors, including:

  - Healthcare: Assisting with data analysis, medical imaging, and diagnostic support.

  - Finance: Streamlining risk analysis, fraud detection, and financial modeling.

  - Smart Cities: Optimizing traffic management, urban planning, and energy consumption.

  - Research: Aiding in scientific discoveries, technical problem-solving, and academic endeavors.
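Because the prompt and the generated reply share the same context window, the practical question is whether your prompt tokens plus the maximum output fit under the limit; for R1, the reasoning tokens count toward the output as well. The sketch below assumes the 128,000-token figure above as a default, but individual platforms may enforce a lower cap, so the limit is a parameter. Token counts are assumed to come from the provider (for example, a usage report); the code does not tokenize text itself.

```python
CONTEXT_LIMIT = 128_000  # tokens, the figure stated for DeepSeek's models


def fits_in_context(prompt_tokens: int, max_output_tokens: int,
                    limit: int = CONTEXT_LIMIT) -> bool:
    """Prompt and generated tokens share one window, so both must fit."""
    return prompt_tokens + max_output_tokens <= limit


def max_prompt_tokens(max_output_tokens: int, limit: int = CONTEXT_LIMIT) -> int:
    """Largest prompt that still leaves room for the requested output."""
    return max(limit - max_output_tokens, 0)


if __name__ == "__main__":
    # Hypothetical request: a 100,000-token document plus an 8,000-token answer.
    print(fits_in_context(100_000, 8_000))          # True
    # A platform with a hypothetical 64K cap would not fit the same request.
    print(fits_in_context(100_000, 8_000, 64_000))  # False
    print(max_prompt_tokens(8_000))                 # 120000
```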

DeepSeek’s commitment to providing advanced, adaptable solutions has made it a valuable tool for industries that require both general and specialized AI capabilities. Its ability to balance performance with cost-effectiveness has also contributed to its growing popularity in the AI space.

Conclusion

Each of these DeepSeek alternatives offers something different: together they not only get around the “server busy” issue on DeepSeek’s official website but also give users with different needs a variety of options.

I hope this article helps you find the solution that best suits your needs. Feel free to share your experiences with these platforms in the comments section.